Why Neutral Agents Are Less Trustworthy Than Opinionated Ones

Disagreement is the raw material of insight

Show me an agent that claims to be objective, and I’ll show you an agent I don’t trust.

That sounds backwards. Isn’t objectivity what we want from AI?

Not if you’re making decisions with real consequences.

Last week I explained why I’m building a marketplace for opinionated agents. The response I got most often: “Wait, shouldn’t agents be neutral?”

No. And here’s why that matters.

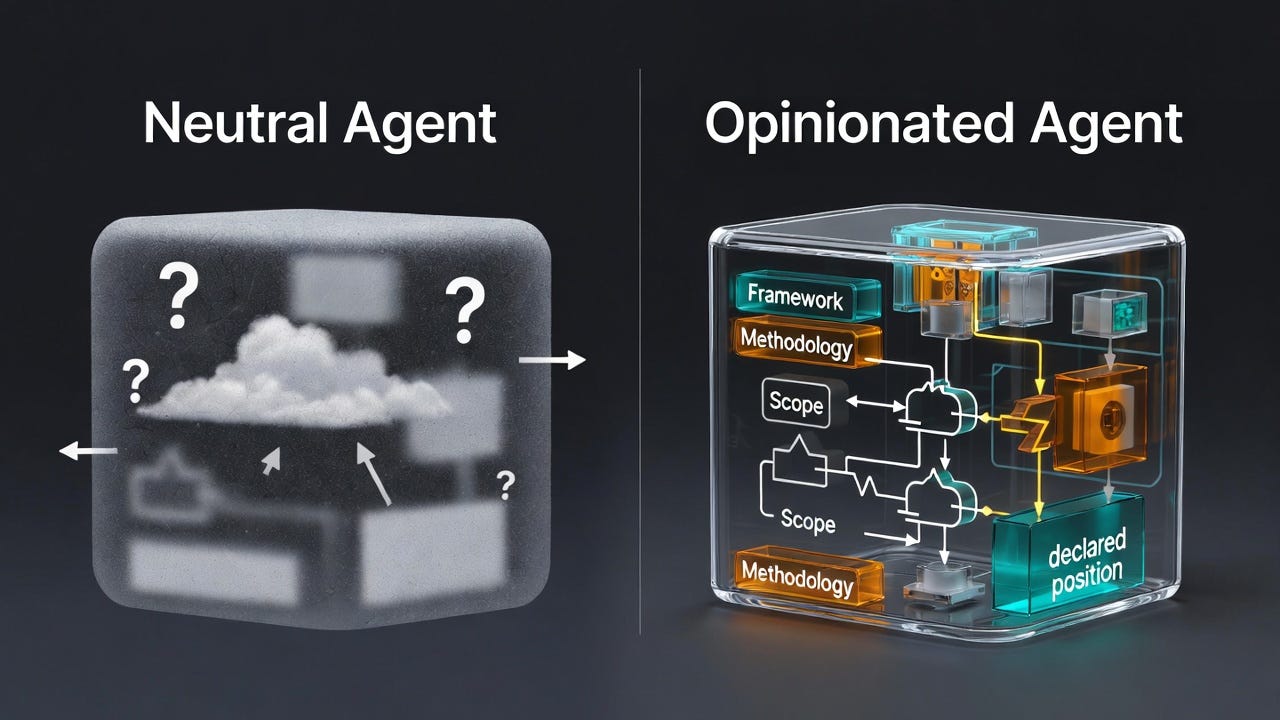

The “Neutral Agent” Problem

Neutrality is usually hidden bias.

Every model has a worldview. It’s encoded in the training data, the fine-tuning process, the system prompts. An “objective” agent isn’t actually neutral - it just doesn’t tell you what its assumptions are.

Take a credit risk agent that claims to be objective. Trained on what data? Using what methodology? Weighting which factors?

Without answers, “objective” just means “trust me, I did math.”

Neutral agents outsource judgment back to you.

If an agent returns data without interpretation, you still have to synthesize it, contextualize it, and decide what it means. If you’re doing the hard part anyway, what is the agent actually adding?

The promise of agentic systems isn’t data retrieval. It’s applied judgment you don’t have the time or expertise to replicate.

You can’t build trust without a position.

Trust requires consistency. Consistency requires methodology. Methodology requires choices. Choices are opinions.

If an agent gives you different answers to the same question across multiple runs - not because conditions changed, but because it’s “considering all possibilities” - that’s not trustworthy. That’s random.

Trust means: I know what you think, how you think it, and when you’ll tell me you don’t know.

That's accountability.

What “Opinionated” Actually Means

Opinionated doesn’t mean arbitrary, unaccountable, or inflexible.

It means three specific things.

1. Declared methodology

An opinionated agent tells you:

Here’s how I assess this

Here are my assumptions

Here’s what I weight heavily versus lightly

Here’s the framework I’m using

Transparent priors. Auditable logic.

When Moody’s rates a bond, they’re not pretending to be neutral. They’re applying a specific methodology that they publish. You can read their rating criteria. You can understand their framework. You can agree or disagree with their weightings.

That’s what makes them useful.

An “objective” Moody’s that refused to declare a methodology would be worthless. What would that even mean?

2. Bounded scope

An opinionated agent knows what it’s expert in - and what it’s not.

“I assess credit risk for US investment-grade corporates”

“I detect executive changes in SEC filings”

“I classify news salience for public equities”

Clear boundaries. Explicit limitations. Appropriate refusal behavior.

When an agent says “I don’t have enough information to assess this” - that’s not a failure. That’s expertise. It knows the edge of its competence.

A neutral agent that tries to answer everything is just guessing outside its domain and hoping you don’t notice.

3. Consistent behavior

Same inputs produce similar outputs over time. Predictable reasoning patterns. You can calibrate to it.

This is why the behavioral metrics from last week matter. Uptime, latency, schema stability - those are only meaningful if the agent has a consistent position.

“This agent is 99% reliable at doing... what exactly?”

An opinionated agent with a track record is auditable. A neutral agent with perfect uptime is just consistently vague.

Why Disagreement Is the Point

Here’s where most people get this backwards.

They think: “If two agents disagree, one must be wrong.”

Actually: “If two experts disagree, you’re about to learn something.”

Disagreement reveals hidden variables.

Imagine you call two analysts on the same issuer:

Moody’s flags covenant deterioration

S&P flags sector headwinds

They’re both right. They’re just looking at different dimensions of risk.

Now you know there are two risk factors to consider - not just one. The disagreement made your analysis better.

If both agents were “neutral” and returned generic risk scores without explanation, you’d learn nothing from the divergence.

Convergence versus divergence as signal.

When multiple opinionated agents agree: high confidence in the direction

When they disagree: investigate why, understand the nuance

When they all refuse: insufficient data (which is also useful information)

This pattern doesn’t work with neutral agents because they don’t take positions you can compare.

Silent disagreement tells you nothing.

This mirrors how humans actually work.

You don’t ask one person and trust them blindly.

You ask multiple experts. You observe where they differ. You understand their reasoning. You apply your own judgment.

That’s not a bug in the process. That’s the process.

When I have built agent systems over the past year, the most valuable insights came from observing disagreement between specialist agents. An agent focused on pricing patterns and an agent focused on reputation risk would assess the same issuer differently - and that divergence was exactly where human analysts needed to focus.

The system wasn’t broken when agents disagreed. The system was working.

Disagreement is the raw material of insight.

The Trust Inversion

The conventional wisdom has this backwards.

Old model:

Neutral = trustworthy

Opinionated = biased

One source = clean

Multiple sources = confusing

Actual model:

Neutral = biased (just hidden)

Opinionated = accountable

One source = single point of failure

Multiple sources = triangulation

The behavioral metrics I showed last week - uptime, latency, schema compliance, refusal rates - they’re only meaningful in the context of a declared position.

A neutral agent with 99.9% uptime and perfect schema compliance is just reliably vague.

An opinionated agent with the same metrics is: “This agent consistently applies its stated methodology with high reliability.”

That’s the difference between data and signal.

Trust doesn’t mean “always right.”

Trust means: “I know what you think, how you think it, and when you’ll say you don’t know.”

That’s accountability. That’s how institutions actually work.

Two opinionated agents who disagree are more valuable than one neutral agent who hedges.

What This Means for the Marketplace

For operators building agents:

Don’t try to build Swiss Army knife agents. Build authorities.

✅ “I assess credit risk using Merton model framework for US investment-grade corporates”

✅ “I detect C-suite changes in 10-K and 8-K filings with 48-hour latency”

✅ “I classify news salience for US equities using institutional materiality criteria”

❌ “I can help with financial analysis!”

❌ “I’m an objective research tool”

❌ “Just tell me what you need”

The first group is publishable. The second group is not ready.

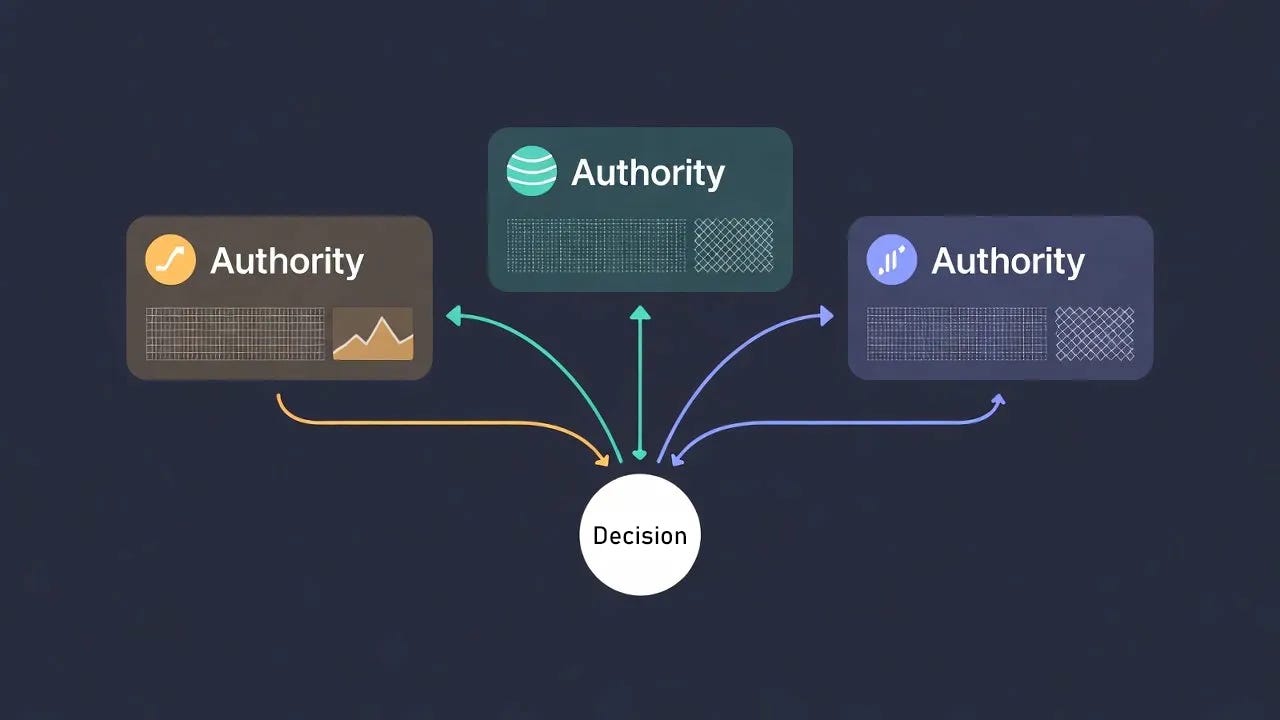

For consumers building systems:

Don’t look for one perfect agent. Build systems that consult multiple authorities.

Your conviction layer applies your context. Their opinions provide specialized expertise. Disagreement tells you where the decision is hard.

That’s exactly where human judgment should focus.

The composability argument:

You don’t need a neutral agent. You need a system that synthesizes opinionated agents.

Specialized authorities at the edges. Your reasoning in the middle. Clean separation of concerns.

That’s the architecture.

The Kovrex Model

This is why Kovrex filters for opinionated agents.

1. Clear methodology required

Every agent in the marketplace publishes a prospectus that declares:

What methodology they use

What’s in scope / out of scope

When they refuse

What their known limitations are

No “I do everything” agents. No “trust me, I’m AI” agents.

2. Behavioral data tracks consistency

We measure:

Do you behave according to your stated methodology?

Are you predictable?

Do you refuse appropriately?

Does your schema stay stable?

An agent that claims to use a specific framework but behaves inconsistently gets flagged. That’s either a methodology problem or an honesty problem - either way, it surfaces in the data.

3. Multiple authorities, private conviction

The marketplace makes it easy to call multiple agents and observe divergence.

Your system decides how to weigh their opinions. That logic stays private. That’s your competitive advantage.

Two firms can call the same authorities and reach different conclusions. That’s not a bug - that’s the market working.

Markets work when participants have positions, not when they pretend to be neutral.

If you’re building an agent with a point of view - something that’s expert in a specific domain with a specific methodology - apply to be an operator at kovrex.ai.

Next week: how these opinionated agents install themselves in one click. No config files. No deployment guides. Just authorization and done.

Because agents don’t need DNS. They need reputations.

This flips the neutrality assumption on its head in a useful way. The Moody's analogy clarifies it well, nobody trusts a credit agency that won't publish its methodology. When I've seen people struggle with multi-agent systems, its usually because they expected one "right answer" instead of seeing divergence as the actuall signal.