Designing Organizations That Think

What changes when you stop optimizing tasks

A few months ago, I was at an industry conference on AI in asset management.

The panels were good. The speakers were smart. But something felt off.

Every conversation circled the same questions. How do we automate this process? How do we make analysts more productive? Which platform should we use? How do we bolt AI onto what we already do?

Even the most sophisticated speakers were asking the same thing, just phrased differently: How do we use AI to make what we already do faster or cheaper?

I sat there feeling out of sync.

Not because they were wrong. But because I wasn’t thinking about tools at all.

I was thinking about how AI changes how an organization thinks.

Back at work, we had built something that did not fit any of the categories I heard at that conference.

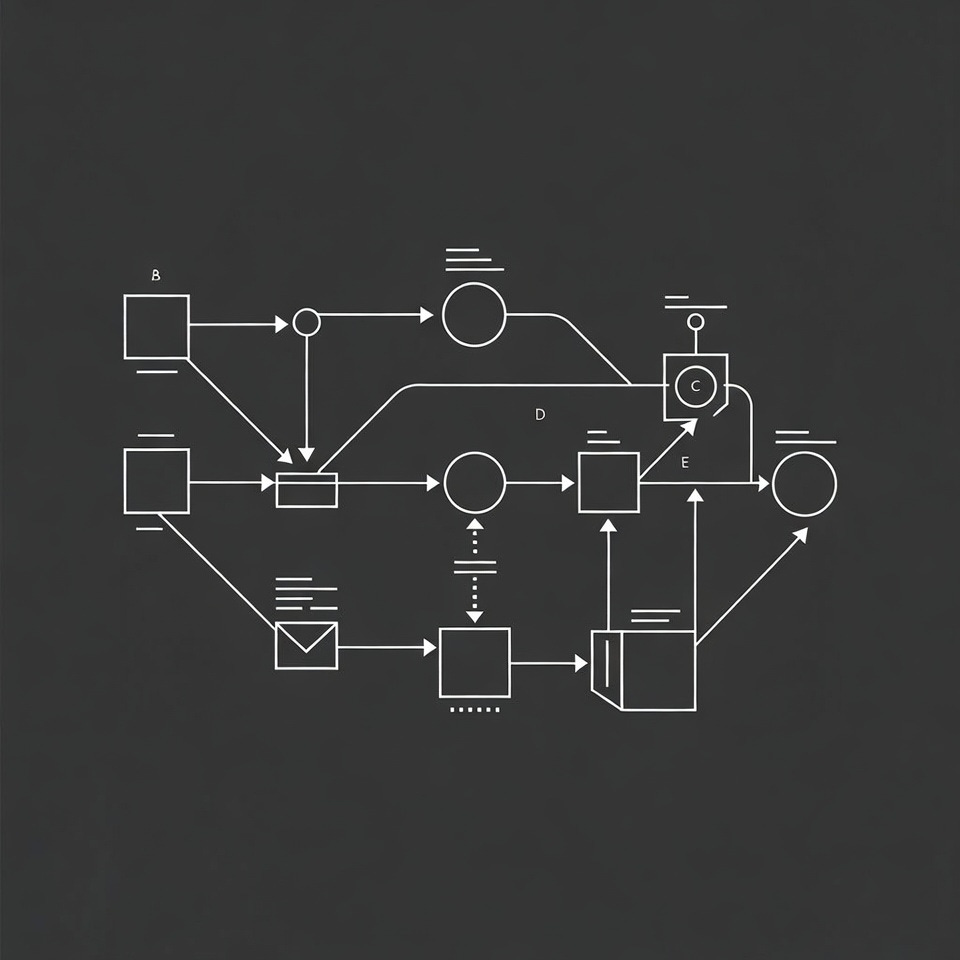

A network of agents, hundreds of them, running continuous surveillance across our CLO portfolio. Not a dashboard someone checks. Not a report someone runs. A system that watches, remembers, and surfaces what matters to human decision-makers.

Institutional memory encoded into software. Judgment that runs whether or not anyone’s paying attention. Humans still make the decisions; the system makes sure the right ones are hard to miss.

This wasn’t AI bolted onto the business. It was AI re-architecting how information and judgment flow through the business.

When I showed it to someone on my team, his reaction stuck with me.

He didn’t say, “That’s impressive,” or “That’s efficient.”

He said, “I’m not sure I would have thought to build something like that.”

He wasn’t talking about the technology. He was talking about the framing. The decision to build a system that thinks, rather than a tool that helps.

That’s the gap I keep noticing.

Most people approach AI at the task level. What can this do for me? How do I get a better output? What’s the right prompt?

Those questions aren’t wrong. But they stay local. They optimize what already exists.

The bigger shift happens when you move up one level and start asking: What kind of system am I building?

Once you’re thinking that way, different questions emerge. Where should judgment live? Which decisions should repeat automatically? What information needs to be retained? What can safely be ignored? How do feedback loops close?

That’s not prompt engineering. It’s closer to operating system design.

AI is becoming a new organizational primitive.

Not just a tool, but a building block, alongside people, processes, data, and capital.

When those elements combine well, the result isn’t just speed. It’s clarity. Decisions get quieter. Fewer things require debate. The organization spends less time reacting and more time executing.

The most effective AI implementations I’ve seen don’t look flashy. They feel calm. Almost boring. As if friction had been removed from places you didn’t realize were loud.

This is why so many AI strategies struggle.

They’re framed as technology initiatives. Pick a vendor. Run a pilot. Measure productivity gains.

But the hard questions aren’t technical. They’re about agency, judgment, and continuity. What should run on its own? What should never be delegated? What feedback actually matters?

Those are leadership questions.

And the people who answer them well rarely look like traditional AI experts. They look like operators and system designers, people who understand how organizations actually break.

The advantage right now doesn’t go to the people who know the most tools or write the best prompts.

It goes to the people who can design organizations that think.

That skill won’t show up neatly on a resume. But once an organization reaches a certain level of complexity, it becomes the thing that matters most.

We’re at that moment now.